What is OmniGen2?

OmniGen2 is a versatile and open-source generative model designed to provide a unified solution for diverse generation tasks. Built upon the foundation of Qwen-VL-2.5, it features distinct decoding pathways for text and image modalities.

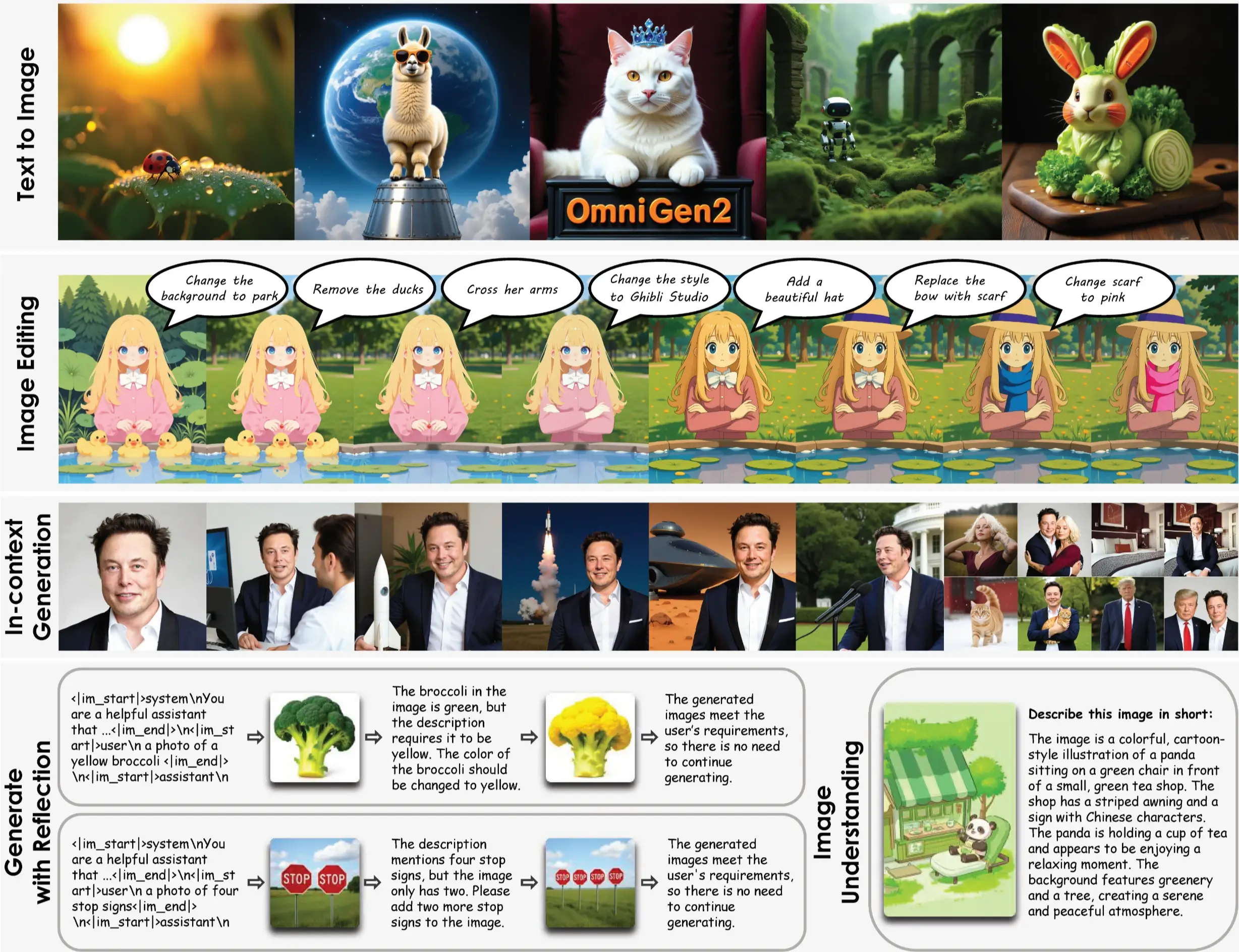

OmniGen2 excels across four primary capabilities:

- Visual Understanding

- Text-to-Image Generation

- Image Editing

- In-context Generation

Image credit: https://vectorspacelab.github.io/OmniGen2/

Overview of OmniGen2

| Feature | Details |
|---|---|
| Model Name | OmniGen2 |
| Developer | VectorSpaceLab |
| Foundation | Qwen-VL-2.5 |
| License | Apache 2.0 (open-source) |
| Requirements | ~17GB VRAM (RTX 3090 or equivalent) |
| Primary Tasks | Visual Understanding, Text-to-Image, Image Editing, In-context Generation |
| Framework | Unified multimodal generation |
| Hosting | GitHub & Hugging Face |

How to Use OmniGen2

Option 1: Online Demo (Free but Limited)

- Go to the official GitHub repo (github.com/VectorSpaceLab/OmniGen2).

- Click "Online Demos" and select a Hugging Face Space.

- Upload an image and start editing.

Note: Free online usage has daily limits.

Option 2: Install Locally (Unlimited Offline Use)

System Requirements

- Minimum: under 3GB VRAM with sequential CPU offload enabled (much slower)

- Recommended: 17GB+ VRAM for smooth performance
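Not sure which tier your GPU falls into? The sketch below reads your card's total VRAM through PyTorch and maps it to an offload mode using the rough figures quoted in this article: about 17GB with no offload, roughly half that with enable_model_cpu_offload, and under 3GB with enable_sequential_cpu_offload. The helper names and thresholds are assumptions for illustration, not part of OmniGen2, and real memory use also depends on resolution and other settings.

```python
from typing import Optional

# Assumed thresholds, derived from the figures quoted in this article:
# ~17GB without offloading, roughly half that with model CPU offload,
# and under ~3GB with sequential CPU offload.
NATIVE_VRAM_GB = 17.0
MODEL_OFFLOAD_VRAM_GB = NATIVE_VRAM_GB * 0.5


def recommend_offload(vram_gb: float) -> str:
    """Return the least aggressive offload option expected to fit in vram_gb."""
    if vram_gb >= NATIVE_VRAM_GB:
        return "none"
    if vram_gb >= MODEL_OFFLOAD_VRAM_GB:
        return "enable_model_cpu_offload"
    # Below ~8.5GB, fall back to the slowest but most memory-frugal mode.
    return "enable_sequential_cpu_offload"


def detect_vram_gb(device: int = 0) -> Optional[float]:
    """Total memory of a CUDA device in GiB, or None if PyTorch/CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(device).total_memory / 1024**3


if __name__ == "__main__":
    vram = detect_vram_gb()
    if vram is None:
        print("No CUDA GPU detected; CPU-only runs will be very slow.")
    else:
        print(f"{vram:.1f} GiB VRAM -> {recommend_offload(vram)}")
```

On an RTX 3090 (24GB) this reports "none"; on an 8GB card it suggests enable_sequential_cpu_offload.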

Step-by-Step Installation

- Install Git (skip if already installed)

Download Git from git-scm.com and run the installer with default settings.

- Clone the OmniGen2 repository

Open Command Prompt (cmd), navigate to your desired folder, and run:

git clone https://github.com/VectorSpaceLab/OmniGen2.git

- Install Conda (recommended)

Download Miniconda for Python 3.11 and run the installer, then add Conda to your system PATH (see the installer instructions).

- Create a virtual environment

In Command Prompt, move into the cloned folder, then create and activate the environment:

cd OmniGen2
conda create -n omnigen2 python=3.11
conda activate omnigen2

- Install PyTorch (CUDA build)

Check your CUDA version:

nvcc --version

Then install the matching PyTorch build (this example is for CUDA 12.4):

pip install torch==2.6.0 torchvision --index-url https://download.pytorch.org/whl/cu124

- Install the required packages

pip install -r requirements.txt

- (Optional) Install Flash Attention for faster generation

Download a pre-built wheel from Hugging Face and install it:

pip install [path_to_downloaded_wheel]

- Install Gradio (for the web interface)

pip install gradio

- Launch OmniGen2

python app.py
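If you're not sure which PyTorch build to pick in the CUDA step, a small helper can read the nvcc --version output and build the matching download.pytorch.org index URL. This is a hypothetical convenience script, not part of the OmniGen2 repo. It assumes PyTorch's cuXYZ naming (as in the cu124 URL used above) and cannot tell you whether wheels actually exist for your CUDA version.

```python
import re
import subprocess
from typing import Optional, Tuple


def parse_cuda_release(nvcc_output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from text like 'Cuda compilation tools, release 12.4, V12.4.131'."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def pytorch_index_url(major: int, minor: int) -> str:
    """Build the PyTorch wheel index URL for a CUDA version, e.g. (12, 4) -> .../whl/cu124.

    PyTorch only publishes wheels for selected CUDA versions, so the URL for an
    arbitrary version may not exist.
    """
    return f"https://download.pytorch.org/whl/cu{major}{minor}"


def detect_cuda_release() -> Optional[Tuple[int, int]]:
    """Run `nvcc --version` and parse it; None if nvcc is missing or fails."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = detect_cuda_release()
    if release is None:
        print("nvcc not found; check your CUDA toolkit installation.")
    else:
        print(f"CUDA {release[0]}.{release[1]} -> {pytorch_index_url(*release)}")
```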

What Can OmniGen2 Do?

OmniGen2 lets you edit images in ways that previously required professional tools like Photoshop, except that here you just type what you want and the AI does the rest.

Demo Gallery - See OmniGen2 in Action

Image credit: vectorspacelab.github.io/OmniGen2

Basic Image Edits

Replace Objects in an Image

- Upload an image of a cat and an apple.

- Type: "Replace the apple with the cat."

- The AI not only swaps them but also adjusts the cat's white balance to match the background.

Modify Poses & Interactions

- Upload a photo of a man and a woman.

- Type: "Make the man and woman kiss and hug."

- The AI generates a natural-looking interaction.

Change Backgrounds & Scenes

- Upload a reference photo of a character.

- Type: "Place her in a cozy café sitting in front of a laptop."

- The AI preserves her appearance while placing her in the new setting.

Advanced Edits

You can also perform multiple sequential edits:

- Start with an initial image.

- Change the background to a park.

- Remove unwanted objects (like ducks).

- Adjust poses (e.g., "Make her cross her arms.").

- Apply artistic styles (e.g., "Change to Ghibli style.").

- Add or modify accessories (e.g., "Add a hat." or "Replace her bow with a scarf.").
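The sequential-edit workflow above boils down to a simple loop: each edit's output becomes the next edit's input. The sketch below shows that pattern with a hypothetical edit_fn callback standing in for whatever actually performs one OmniGen2 edit (the Gradio app, or your own wrapper around the model); it is not OmniGen2's API. Keeping the full history makes it easy to step back if one edit goes wrong.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")  # whatever image type your editing backend uses


def run_edit_chain(
    image: T, instructions: List[str], edit_fn: Callable[[T, str], T]
) -> Tuple[T, List[T]]:
    """Apply instructions one at a time, feeding each result into the next edit.

    Returns the final image and the full history (the input plus every intermediate).
    """
    history: List[T] = [image]
    current = image
    for instruction in instructions:
        current = edit_fn(current, instruction)
        history.append(current)
    return current, history


if __name__ == "__main__":
    # Hypothetical stand-in for a real OmniGen2 call: it only records the instructions.
    def fake_edit(img: str, instruction: str) -> str:
        return f"{img} -> [{instruction}]"

    steps = [
        "Change the background to a park.",
        "Remove the ducks.",
        "Make her cross her arms.",
        "Change to Ghibli style.",
        "Add a hat.",
    ]
    final, history = run_edit_chain("initial.png", steps, fake_edit)
    print(final)
```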

Requirements to Run OmniGen2

Before getting started, here's what you'll need:

- ~17GB VRAM (RTX 3090 or equivalent)

- Python 3.11+ environment

- PyTorch 2.6.0+ with CUDA support

- Optional: CPU offload for lower VRAM
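Before launching, a quick sanity check against these requirements can save a failed run. The helper below is hypothetical (it does not ship with OmniGen2): it checks the Python version, reads the installed PyTorch version via importlib.metadata, and asks PyTorch whether it can see a CUDA GPU.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import List, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Leading numeric parts of a version string: '2.6.0+cu124' -> (2, 6, 0)."""
    numbers = []
    for piece in text.split("+", 1)[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        numbers.append(int(digits))
    return tuple(numbers)


def meets_minimum(found: str, minimum: str) -> bool:
    """Compare versions numerically, padding the shorter one with zeros."""
    a, b = parse_version(found), parse_version(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_environment(min_torch: str = "2.6.0") -> List[str]:
    """Return a list of problems; an empty list means the basics look fine."""
    problems = []
    if sys.version_info < (3, 11):
        problems.append(f"Python 3.11+ required, found {sys.version.split()[0]}")
    try:
        torch_version = version("torch")
    except PackageNotFoundError:
        problems.append("PyTorch is not installed")
        return problems
    if not meets_minimum(torch_version, min_torch):
        problems.append(f"PyTorch {min_torch}+ required, found {torch_version}")
    import torch  # only imported once we know it is installed

    if not torch.cuda.is_available():
        problems.append("PyTorch cannot see a CUDA GPU (CPU-only build or driver issue)")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("Environment looks OK" if not issues else "\n".join(issues))
```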

Key Features of OmniGen2

Visual Understanding

Inherits robust image interpretation abilities from its Qwen-VL-2.5 foundation.

Text-to-Image Generation

Creates high-fidelity, aesthetically pleasing images from textual prompts.

Instruction-guided Editing

Performs complex, instruction-based image modifications with precision.

In-context Generation

Flexibly combines diverse inputs to produce novel and coherent outputs.

Unified Framework

Single model handles multiple tasks without requiring additional modules or adapters.


Technical Architecture

Dual Decoding Pathways

Separate specialized pathways for text and image generation with unshared parameters.

Decoupled Image Tokenizer

Enhanced efficiency and specialization through independent image processing.

System Requirements & Setup

This guide covers installation on Ubuntu with an NVIDIA RTX A6000 (48GB VRAM), but you can run this on any system with a compatible GPU and Python environment.

Note: If you don't own a GPU, consider using cloud GPU rental services like MastCompute which offers GPU rentals at reasonable prices with discount codes often available.

Step 1: Create a Virtual Environment

Open your terminal and start by setting up a virtual environment (make sure python3 is version 3.11 or newer):

python3 -m venv omnigen2_env
source omnigen2_env/bin/activate

Step 2: Clone the OmniGen2 Repository

Run the following command to get the source code:

git clone https://github.com/VectorSpaceLab/OmniGen2.git
cd OmniGen2

Step 3: Install Dependencies

Install the required Python packages (this will take a few minutes depending on your internet speed and system performance):

pip install -r requirements.txt

Step 4: Launch the Application

Once the installation is complete, launch the demo:

python app.py

This command will:

- Download the model weights automatically

- Launch a web UI on localhost:7860

Then open your browser and go to:

http://localhost:7860

You'll now see the OmniGen2 interface live and ready to use!
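Because app.py downloads the model weights before the UI responds, the page may not load right away on the first launch. If you want to script around that, a small standard-library poller can wait until something answers on port 7860. The helper name and timeout values are assumptions for illustration.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(
    url: str = "http://localhost:7860", timeout: float = 600.0, interval: float = 2.0
) -> bool:
    """Poll url until it answers or timeout seconds pass; True if the server responded."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True  # any successful response means the UI is up
        except urllib.error.HTTPError as err:
            if err.code < 500:
                return True  # server is answering, even if this path returns 4xx
        except OSError:
            pass  # connection refused or timed out: server not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Run it from a second terminal while app.py starts up, and open the browser once wait_for_server() returns True.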

Performance Specifications

Memory Usage

- Standard Operation: ~18GB VRAM

- Processing Time: ~2 minutes (50 steps)

- Optimization: Good memory efficiency

Default Settings

- Sampling Steps: 50 (default)

- Configuration: Works well out of the box

- Customization: Fully adjustable
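As a rough worked example, ~2 minutes for 50 steps works out to 120 / 50 = 2.4 seconds per step, so 30 steps would take about 72 seconds on the same hardware. The sketch assumes runtime scales linearly with the step count and ignores fixed costs such as model loading, so treat its numbers as ballpark estimates; the function names are hypothetical.

```python
def seconds_per_step(ref_seconds: float = 120.0, ref_steps: int = 50) -> float:
    """Per-step cost implied by a reference run (default: ~2 minutes for 50 steps)."""
    if ref_steps <= 0:
        raise ValueError("ref_steps must be positive")
    return ref_seconds / ref_steps


def estimate_runtime(steps: int, ref_seconds: float = 120.0, ref_steps: int = 50) -> float:
    """Estimated seconds for `steps` sampling steps, assuming cost scales linearly."""
    return steps * seconds_per_step(ref_seconds, ref_steps)


if __name__ == "__main__":
    for steps in (20, 30, 50):
        print(f"{steps} steps ~ {estimate_runtime(steps):.0f} s")
```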

Performance Optimization

Memory Optimization Options:

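- enable_model_cpu_offload: Reduces VRAM usage by roughly 50% with little impact on speed

- enable_sequential_cpu_offload: Minimizes VRAM usage to under 3GB, at the cost of significantly slower generation

- max_pixels: Caps the total pixel count (width × height) of input images; larger images are downscaled with their aspect ratio preserved, which limits memory usage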
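Here is a sketch of the arithmetic behind a pixel budget like max_pixels, assuming an oversized input is scaled down uniformly until width × height fits, with its aspect ratio preserved. The function name is hypothetical, and OmniGen2's own pipeline may round the resulting dimensions differently.

```python
import math
from typing import Tuple


def fit_to_max_pixels(width: int, height: int, max_pixels: int) -> Tuple[int, int]:
    """Downscale (width, height) so width * height <= max_pixels, keeping aspect ratio.

    Images already within the budget are returned unchanged.
    """
    if width <= 0 or height <= 0 or max_pixels <= 0:
        raise ValueError("width, height and max_pixels must be positive")
    if width * height <= max_pixels:
        return width, height
    # Scaling both sides by s scales the area by s**2.
    scale = math.sqrt(max_pixels / (width * height))
    new_w = max(1, math.floor(width * scale))
    new_h = max(1, math.floor(height * scale))
    # Guard against floating-point rounding pushing the product just over budget.
    while new_w * new_h > max_pixels and (new_w > 1 or new_h > 1):
        if new_w >= new_h:
            new_w -= 1
        else:
            new_h -= 1
    return new_w, new_h
```

For example, a 4000 × 3000 photo with max_pixels = 1024 × 1024 comes out at 1182 × 886.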

Quality Controls:

- text_guidance_scale: Controls adherence to text prompts

- image_guidance_scale: Controls how closely the output follows the reference image (roughly 1.2-2.0 for editing, 2.5-3.0 for in-context generation)

- negative_prompt: Specify what to avoid in generation

Applications and Use Cases

Creative Design

Generate artwork, concept designs, and visual prototypes from text descriptions.

Content Creation

Create social media content, marketing materials, and visual storytelling assets.

Image Enhancement

Edit existing images with natural language instructions for professional results.

Product Visualization

Create product mockups and variations for e-commerce and marketing.

Research and Development

Explore multimodal AI capabilities and develop new applications.

Educational Tools

Create visual learning materials and interactive educational content.